Project 1: Albertine Rift Pipeline Analysis - Part 1

Scenario

The African Conservation Organization (ACO) has just learned that the government of Uganda has plans to construct a new pipeline to deliver oil from a central processing facility south of Murchison Falls National Park to a refinery in the Kabale parish in Hoima, Uganda. Two potential routes have been proposed for the pipeline, and a hearing on the two options is being held in a few weeks. This is the ACO's only opportunity to voice any concerns about the biological impacts of each proposed route and present their recommendations.

The ACO has reached out to you to help evaluate the impacts of each proposed route on people, forests, and streams and suggest which route is best. They have been provided with a shapefile of the proposed pipelines, but with very little documentation. Also, the ACO has no available data on land cover, population, and hydrography so you will have to find what you can via public domain sources.

Once you have located and prepared the necessary spatial data, you'll need to determine for each route:

- The estimated number of people within 2.5 km of the proposed route.

- The total area (km²) of wetlands within 2.5 km of the proposed pipeline route.

- The total length (km) over which the pipeline travels through an established protected area.

The best route will have the least area of wetland, the fewest people in the impact zone (2.5 km buffer), and the shortest length within protected areas. Compile your findings and present a memo to the ACO with your recommendation. Be sure to support your findings with effective maps.

Getting to work...

The analytical objectives here are pretty clear, but where you might find the data to do the analysis is not. In lecture, we have discussed some public domain datasets you might explore. You can also search some useful data clearinghouses like ArcGIS Online and DataBasin.org. First, however, we should take inventory of what we have been given and get an idea of the region and spatial extent we are concerned with. So, we’ll begin by creating a workspace and preparing the data the ACO has provided.

1. Set up a project workspace

As mentioned in last week’s exercise, the first step in almost any analysis is to create a workspace for your project. The steps below are the same ones we followed in that exercise.

- Open ArcGIS Pro and select Create a new project. Set its name to ACO_Pipeline and store it on your V: drive, making sure the Create new folder for this project box is checked.

- Create a Data and a Scratch folder in this workspace, and create a scratch geodatabase in the Scratch folder.

- Set your workspace environment settings to point the current workspace and scratch workspace to the Data and Scratch folders, respectively. (Analysis->Environments)
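If you prefer to script the folder layout rather than click through it, the sketch below shows one way to do so with Python's standard library. The function name `create_workspace` and its arguments are illustrative assumptions, not part of the exercise; the scratch geodatabase and the environment settings still have to be set up in ArcGIS Pro as described above.

```python
from pathlib import Path


def create_workspace(root, project_name="ACO_Pipeline"):
    """Create <root>/<project_name>/Data and .../Scratch and return both paths.

    Hypothetical helper: it only builds folders; it does not create the
    scratch geodatabase or touch any ArcGIS Pro settings.
    """
    project_dir = Path(root) / project_name
    data_dir = project_dir / "Data"
    scratch_dir = project_dir / "Scratch"
    for folder in (data_dir, scratch_dir):
        # parents=True also creates the project folder; exist_ok makes reruns safe
        folder.mkdir(parents=True, exist_ok=True)
    return data_dir, scratch_dir
```

For example, `create_workspace("V:/")` would build the two folders under `V:/ACO_Pipeline`, assuming the `V:` drive is available.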

2. Add and prepare provided data

You are provided three shapefiles in the MurchisonPipeline.zip file. The first, Endpoints.shp, includes where the pipeline will start and end, namely the processing facility south of Murchison Falls and the refinery in Kabale parish. This dataset has a defined projection: UTM Zone 36 North, WGS 1984. However, the two pipeline route shapefiles do not, and we are left to guess what projection these files are in. We can never be certain that we’ll get the correct projection, but with some good detective work, we can sometimes become reasonably sure we got it.

- Unzip the contents of the MurchisonPipeline.zip file into your Data folder.

- Create a new map and add the EndPoints.shp shapefile to your map.

- Add Route1.shp to your map. Based on your knowledge of this dataset, it should appear as a line connecting the two points in the EndPoints.shp feature class above. However, nothing appears!

- Suspecting a projection issue with the data, examine which coordinate system the Route1.shp feature class references. You'll see it's not defined. Furthermore, no documentation exists suggesting what coordinate system the data should be defined as. We'll have to guess!

♦ Determining the projection of dataset with no defined coordinate system

Zoom to the extent of the feature layer in question and look at the coordinate values shown at the bottom of the map view's window. [This technique works best if you first set the map's display units to meters!] This will give you a clue as to whether your dataset uses a geographic or projected coordinate system. If it’s geographic, then the values will be between -180 and 180 (possibly between 0 and 360) for X and between -90 and 90 for Y. If the numbers are much larger, the dataset likely uses projected coordinates.

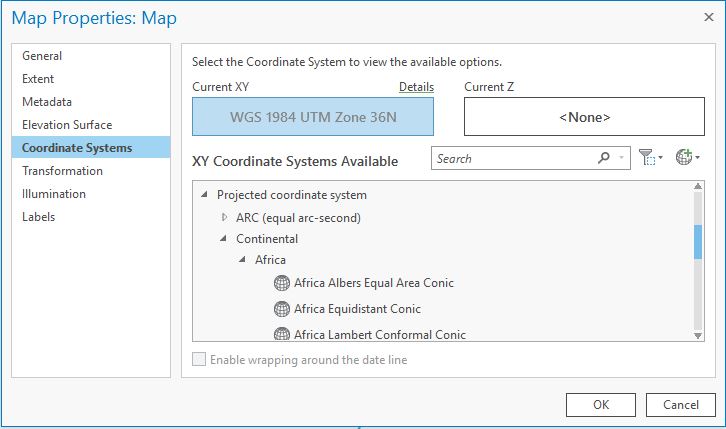
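The rule of thumb above can be written as a small check. This is only a sketch of the heuristic: the function name is made up for illustration, and the rule can be fooled (for example, by a projected dataset that happens to sit very close to its projection's origin).

```python
def guess_coordinate_type(xmin, ymin, xmax, ymax):
    """Return 'geographic' if the extent fits within degree ranges, else 'projected'.

    Heuristic only: X within -180..360 and Y within -90..90 suggests
    latitude/longitude values; anything larger suggests projected units.
    """
    x_in_degrees = -180 <= xmin <= xmax <= 360
    y_in_degrees = -90 <= ymin <= ymax <= 90
    if x_in_degrees and y_in_degrees:
        return "geographic"
    return "projected"
```

Calling it with an extent such as `(31.0, 1.2, 31.8, 2.3)` returns `"geographic"`, while an extent with values in the hundreds of thousands returns `"projected"`. Both extents are invented for illustration and are not read from the lab data.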

With a layer of a known coordinate system added to your map, change the coordinate system of your map view window (via the map's properties). The EndPoints.shp layer added to our map has a defined projection. This enables ArcGIS Pro to reproject these features on the fly. Our Route1.shp feature class has no defined projection, so ArcGIS will not attempt to reproject it; its coordinates are assumed to be in whatever coordinate system the map uses. This allows us to guess at the coordinate system and see if we are right; if we are right, our feature will align with other features of known projections. So, let the guessing begin.

- Open the map's properties and select the Coordinate Systems tab. We know we have a projected coordinate system from looking at the layer's coordinates (above), so expand the Projected Coordinate Systems section. We still have a lot of options to choose from, and any information you can glean from your data source will be helpful in narrowing the choices. Unfortunately, we have nothing to go on, but we do know our dataset is in Africa. Let’s expand Continental and select Africa.

- Try Africa Albers Equal Area Conic and select OK. You may have to zoom to the Route1 layer. It appears close, but not quite dead on: the start and end should match the points in the EndPoints layer.

- Go back to the map's properties and try Africa Equidistant Conic. How do the line features in the Route1 layer match up now? Looks like we have a winner!

Sometimes this method of guessing at an undefined projection is all you can do to use the data. It can be handy, but it comes with some risk: you may have arrived at what looks to be the correct coordinate system, but actually isn’t (e.g. NAD 27 and NAD 83 based systems are very close, but not exactly the same). It’s always useful to try to find documentation on the data’s coordinate system. But when you just need to move on, you should ALWAYS acknowledge that you guessed at the coordinate system when submitting your result.
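Judging alignment by eye can be backed up with a number. The sketch below assumes you have noted the route's raw start and end coordinates, plus the coordinates of the two known endpoints as displayed under each candidate map coordinate system. It then reports which candidate leaves the smallest gap. The function names and any values you pass in are illustrative; nothing here reads the shapefiles or performs a reprojection.

```python
import math


def endpoint_mismatch(route_ends, known_ends):
    """Largest distance from a route end to its nearest known endpoint."""
    return max(
        min(math.dist(end, known) for known in known_ends)
        for end in route_ends
    )


def best_candidate(route_ends, endpoints_by_crs):
    """Return the candidate name with the smallest endpoint mismatch.

    route_ends: the route's two end coordinates exactly as stored (unprojected).
    endpoints_by_crs: maps a candidate name to the known endpoints'
    coordinates as shown when the map uses that candidate system.
    """
    return min(
        endpoints_by_crs,
        key=lambda name: endpoint_mismatch(route_ends, endpoints_by_crs[name]),
    )
```

A small mismatch supports a guess but does not prove it, which is why the guess should still be acknowledged when you report results.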

Now, add Route2.shp to your map. This dataset also has no defined coordinate system. See if you can figure it out and then project this dataset to match the Endpoints.shp feature class too. If you get too stuck, ask an instructor, a TA, or a fellow student to see how they found it (and try to figure out how they got it and you didn’t), but don’t waste an inordinate amount of time guessing.

At the end of this step, you should now have a proper workspace with three feature classes sharing the same coordinate system. While it’s not necessary to have all input datasets having the same projection, it will greatly reduce the risk of confusion and even error in subsequent analyses. We now also have a rough idea of the extent to which we need to search for the other required datasets: population, protected areas, and wetlands.

3. Searching for data

Like finding the correct coordinate system for a dataset with limited or no metadata, searching for data is also something of a wild goose chase. There’s no correct way to locate the exact data you might need. Rather, you need familiarity with what datasets might be out there, awareness of useful up-to-date data portals, and a strategy for general web searches. Your search can take a variety of directions. You may want to begin by looking for what datasets might be available for the geographic region of your analysis (e.g. “Albertine Rift” or “Uganda”). Or, you may want to start by looking for data by theme (e.g. “wetlands”, “protected areas”). Again, there’s no correct answer and, unfortunately, there’s a degree of luck involved.

Here, we’ll begin our search for data together. It’s quite possible you’ll find a more recent, more accurate, more precise dataset on your own, and if you do, feel free to use it in your analysis. Recall, however, that we have a limited budget to get our work done in this case, so you need to get a feel for when your continued data search starts to be more effort than it’s worth. With that in mind, let’s dive in! First, we’ll review what public domain datasets might be useful, and then we’ll scout out some of the more promising geospatial portals.

Public Domain Datasets

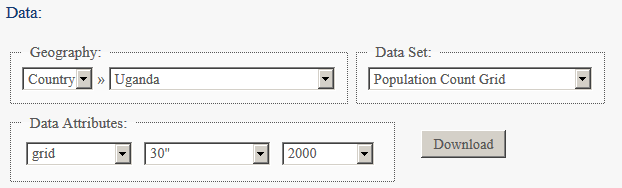

In lecture, we reviewed several public domain datasets that may be useful here. Most of these (i.e. the non-US ones) were global, so we know our study extent would be included. The questions we then have to ask are: is their resolution (spatial and thematic) fine enough to serve our purpose? Are they recent enough? Sifting through these would be a good place to start, and we’ll go ahead and tackle our first dataset, population, using the public domain population dataset provided by SEDAC, i.e. the Global Rural-Urban Mapping Project, or GRUMP (v1).

Download and prepare the GRUMP dataset for Uganda

- Start at SEDAC’s main web page: http://sedac.ciesin.columbia.edu/

- Register/log in to the site (required to download data).

- Find the link to browse their data collections and find GRUMPv1.

- Decide whether population count or population density is more appropriate.

- Download the dataset for Uganda. Data for the year 2000 is the most recent, so use that.

- Add the data to your map.
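The choice between count and density matters for how you later total people inside a buffer. As a rough sketch: count cells can be added directly, whereas density cells must first be multiplied by the area each cell covers. The function names and the `cell_area_km2` parameter are assumptions for illustration; use the actual cell area of the grid you download.

```python
def people_from_density(density_per_km2, cell_area_km2):
    """Convert one density cell (people per km²) to an estimated head count."""
    return density_per_km2 * cell_area_km2


def total_people(cell_counts):
    """Population-count cells can simply be summed."""
    return sum(cell_counts)
```

For example, a density of 120 people per km² in a cell covering 0.5 km² corresponds to about 60 people in that cell.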

Note that it’s not always clear cut and rarely are two download sites ever the same. You need to learn how to navigate these sites. And yes, sometimes you need to make an account and log on before you can download the data you want…

Also keep in mind potential bias a data provider might have along with other limitations of the data. Remember: if you don’t have a firm grasp of the analog data on which a digital dataset is based, you may be providing your client with a misleading result.

On-line spatial data platforms

As you’ve just seen, SEDAC provides many more datasets than just the GRUMP population data. It’s useful to take note of that, i.e. add it to your mental or physical list of sites to visit when searching for useful or relevant datasets. Now, however, we’ll turn our attention to on-line resources whose purpose extends beyond just hosting spatial data and more into allowing users to search across a broad spectrum of data providers for specific datasets. We’ll examine two of the most useful data platforms currently available: ArcGIS Online and Data Basin.

ArcGIS Online

Recognizing that finding data is a challenge, ESRI has made significant efforts to facilitate data discovery. These efforts are still evolving, but with ArcGIS Pro, ESRI has integrated a powerful data search utility directly within its desktop application, though the same catalog is available on-line via a web browser. This is referred to as ArcGIS Online, and it can be hugely helpful in searching for data. To access the service from within ArcGIS, activate the Catalog frame and select the Portal tab. Finally, select the All Portal "cloud" icon so we search the entire ArcGIS Catalog collection.

Searching for data with ArcGIS Online can be done by topic, by geography, or sometimes both. We’ll see how ArcGIS Online can help us find a good wetlands dataset for our analysis.

- Try first to search for a good wetlands dataset by using “wetlands” as your search term. If you see any potentially useful datasets, you can click the details box for more details. One important attribute to note is the type of data being shared, e.g. map service, web map service (WMS), feature service, layer package, etc. These dictate how you can use the data in your analysis: some types of data can only be viewed in your map while others allow you to perform analyses with the features.

Did you find any useful wetlands datasets by searching globally for “wetlands”? Try adding one or a few to your map. Open up the dataset’s properties in ArcGIS Pro. What can you tell about the dataset? Was anything saved to your computer? Where?

- Next, try searching geographically. Type in “Albertine Rift” as your search term. Too narrow perhaps? Let’s broaden it; try “Uganda”. Scroll down to see if there are any useful wetland datasets in the search results. Or, you could search for “Uganda wetlands”.

- Find and add a few promising wetland datasets to your map. Investigate the datasets: are they raster or vector data? Can you add the dataset to a geoprocessing model?

Data Basin

Data Basin (http://databasin.org/) is another excellent resource for finding spatial data. You may have actually found a wetlands dataset via ArcGIS Online that was hosted at Data Basin! We’ll use Data Basin to search for a dataset of protected areas in the Albertine Rift area. Before searching, however, you should register yourself with Data Basin. It’s free and it’s required to download many datasets from the site.

Once you are registered and log in, search for protected areas in Uganda. Again, you could begin your search by theme (“protected areas”), by geography (“Albertine Rift”, “Murchison Falls”, “Uganda”), or both.

Once you’ve executed your search, the results can take many forms. Some returns may be downloadable datasets, some may just be maps (or map services that you can display on-line or sometimes in ArcGIS), and others may be documents or figures. You can also sort your results by relevance, by name, or creation date.

For consistency's sake, let’s select the Uganda protected areas dataset provided by the Conservation Biology Institute for our exercise: http://databasin.org/datasets/c5ef5cca827a42de87f7bce418e52bcb .

On this page, you’ll see it’s a Layer Package and that there’s a variety of options for what you can do with the dataset. Specifically, you’ll see you can download it as a Layer Package or as a Zip file. Both can easily be displayed and used in ArcGIS Pro, but I recommend downloading the Zip file. A layer package is itself a zipped file and when you download that, you’ll have to decompress it twice.

Download the zip file, uncompress it and add the layer to your map. You now have all the data necessary to complete your assigned task! We are ready to move onto the next step – preparing the data for analysis.

4. Prepare your data

Once you’ve found and obtained the data required to do your analysis, you may still want or need to prepare the data for analysis. Like the pipeline route data, you may need to define the dataset’s coordinate system and also project the data to match the coordinate system of your other datasets. Depending on the analysis, you may also want to convert vector data to raster, or if it’s already raster data, resample the data so that the cell size matches other datasets. You may also want to create spatial subsets of datasets that extend far beyond your area of interest so that your workspace consumes disk space more efficiently.
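One simple way to define a spatial subset is to take the bounding box of a route and pad it by at least the 2.5 km impact distance. The sketch below assumes coordinates are in a projected system with meter units. The function names are illustrative, and in practice you would feed the resulting extent to whatever clip tool you use in ArcGIS Pro.

```python
def padded_extent(vertices, pad):
    """Bounding box (xmin, ymin, xmax, ymax) of the vertices, grown by pad on every side."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (min(xs) - pad, min(ys) - pad, max(xs) + pad, max(ys) + pad)


def in_extent(point, extent):
    """True if the (x, y) point lies inside or on the edge of the extent."""
    x, y = point
    xmin, ymin, xmax, ymax = extent
    return xmin <= x <= xmax and ymin <= y <= ymax
```

Padding by somewhat more than 2,500 m leaves a margin so that cells or features straddling the buffer edge are not clipped away.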

The exact preparations needed in a given situation depend on the requirements of your analysis and the format of other datasets. What you should do each time, however, is keep a log of any significant changes you’ve made to the original data. This is best done by updating the metadata attached to these datasets. However, if you modified the data using a geoprocessing model, you could also refer to that model as a clear guide to what changes you’ve made to the data in preparation for analysis.

5. Execute your analysis

Now that we have the data required to perform our analysis, the next step is, of course, to execute the analysis. In this case, the analysis is relatively straightforward: you simply need to calculate the three requested values for each of the two pipeline scenarios. The actual values you get, however, may vary depending on the datasets you chose, and it’s your responsibility to be as transparent as possible regarding any decisions you made that may affect the results.

I strongly suggest that you perform all your analysis by building a geoprocessing model. This is useful for at least two important reasons. First, it provides an excellent outline of your analysis that can be reviewed and perhaps revised later if needed. Also, since our analysis is repetitive (same steps for two different pipeline routes), you can just update the pipeline feature class and rerun your model to get your results!
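To illustrate the "build once, rerun per route" idea outside of a geoprocessing model, here is a minimal pure-Python sketch of the population question. It assumes each route is a list of at least two (x, y) vertices in meters and that population is given as cell centers with counts. All names are hypothetical, and treating a cell as inside the buffer when its center is within 2.5 km is a simplification of what a buffer-and-summarize workflow in ArcGIS Pro would do.

```python
import math


def distance_to_segment(p, a, b):
    """Shortest planar distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment's line, then clamp to the segment's ends
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def people_near_route(route, cells, buffer_m=2500.0):
    """Sum the counts of cells whose center lies within buffer_m of the route."""
    total = 0.0
    for center, count in cells:
        nearest = min(
            distance_to_segment(center, a, b) for a, b in zip(route, route[1:])
        )
        if nearest <= buffer_m:
            total += count
    return total


def compare_routes(routes, cells, buffer_m=2500.0):
    """Run the same calculation for every route; returns {route name: people}."""
    return {
        name: people_near_route(line, cells, buffer_m)
        for name, line in routes.items()
    }
```

Because the calculation is wrapped in one function, swapping in the second route means changing only the input, which is the same benefit the model gives you.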

6. Present your results

We will revisit different ways in which you can present your results later, but for now do your best at answering the questions asked, restated here for convenience:

For each pipeline route, provide the following:

- The estimated number of people within 2.5 km of the proposed route.

- The total area (km²) of wetlands within 2.5 km of the proposed pipeline route.

- The total length (km) over which the pipeline travels through an established protected area.

Deliverables

When you have completed your analysis, tidy up your workspace (e.g. remove any temporary files, and update documentation where necessary) and compile your results in a short document that you’d submit to the African Conservation Organization.

Summary/What's next

At the end of this exercise, you should have more confidence in your ability to find data to perform a specific geospatial analysis. You should also have a keener appreciation that finding data is not always an easy or straightforward task, but that with some basic knowledge of general public domain datasets, some key on-line data platforms, and some search know-how, you can learn to become more efficient and more successful in your search for valuable geospatial data.

Although the data will mostly be provided in future lab exercises in this class, you will likely need to search for data at some point, for your course project for example. In Part 2 of this lab, we’ll continue with this project, discussing more about how communicating your results can be nearly as important as knowing how to produce the results in the first place.